
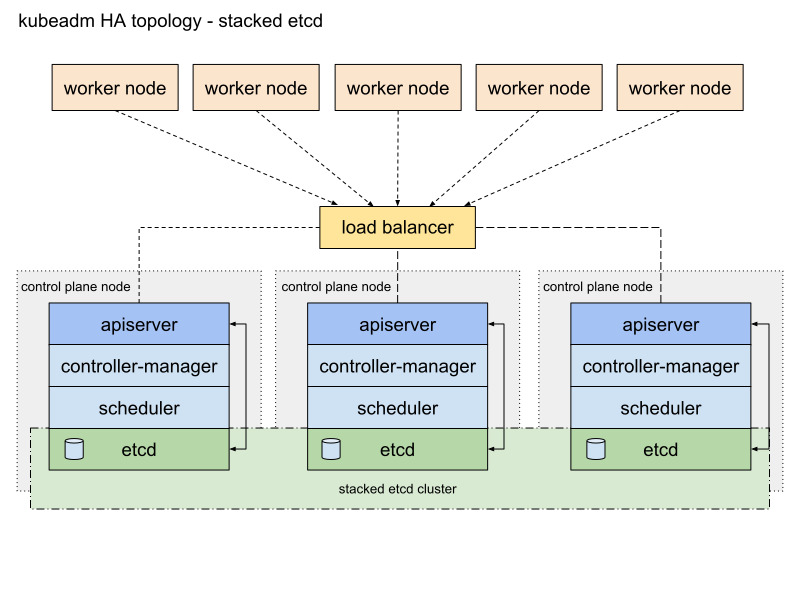

How to implement high availability (HA) for a Kubernetes cluster in a multi-master environment

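Making a Kubernetes cluster highly available (HA) in a multi-master environment means spreading the cluster's control plane across several master nodes, so that no single node is a single point of failure. This improves the reliability and availability of the cluster.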
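This setup uses kubeadm's default stacked etcd topology. The join output in step 4 reports that "A new etcd member was added to the local/stacked etcd cluster", so every master also runs an etcd member. etcd only accepts writes while a majority of its members, floor(n/2) + 1, is healthy. That means the number of masters decides how many failures the control plane survives. The bash sketch below only does this arithmetic. The function names are illustrative and not part of any Kubernetes tool.

```bash
#!/usr/bin/env bash
# Illustrative helpers (hypothetical names): etcd quorum math for n stacked-etcd masters.

# etcd_quorum N: members that must be healthy for etcd to accept writes (a strict majority).
etcd_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# etcd_fault_tolerance N: members that can fail while quorum is still kept.
etcd_fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

# Print a small table for 1 to 5 members.
for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerates=$(etcd_fault_tolerance "$n")"
done
```

With the three masters used here, quorum is 2 and one master can fail. A fourth master raises the quorum to 3 but still tolerates only one failure, which is why odd member counts are the usual recommendation.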

| Hostname | IP Address | Application |
|---|---|---|
| k8s-lb1 | 192.168.0.130 | haproxy |
| k8s-master1 | 192.168.0.131 | kubelet kubeadm kubectl |
| k8s-master2 | 192.168.0.132 | kubelet kubeadm kubectl |
| k8s-master3 | 192.168.0.111 | kubelet kubeadm kubectl |

1. Load balancer setup

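Load balancer: set up a load balancer to distribute requests to the cluster's API servers, and register the API server address (port 6443) of every master node with it. In this setup, HAProxy on k8s-lb1 (192.168.0.130) forwards TCP port 6443 to the three masters. A single HAProxy instance is itself a single point of failure. Production setups usually run redundant load balancers, for example HAProxy paired with keepalived and a virtual IP.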


Install HAProxy and enable the service:

```bash
sudo add-apt-repository -y ppa:vbernat/haproxy-3.0
sudo apt-get install haproxy=3.0.\*
sudo systemctl --now enable haproxy
```

Edit the configuration file:

```bash
sudo vim /etc/haproxy/haproxy.cfg
```

```
# HAProxy configuration file
global
    log 127.0.0.1 local0
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin
    stats timeout 30s
    user haproxy
    group haproxy
    daemon

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

# Kubernetes API Server Frontend
frontend kubernetes
    bind 0.0.0.0:6443
    mode tcp
    option tcplog
    default_backend kubernetes-master-nodes

# Backend for Kubernetes Master Nodes
backend kubernetes-master-nodes
    mode tcp
    option tcp-check
    balance roundrobin
    server master1 192.168.0.131:6443 check fall 3 rise 2
    server master2 192.168.0.132:6443 check fall 3 rise 2
    server master3 192.168.0.111:6443 check fall 3 rise 2

# Statistics interface
listen stats
    bind 192.168.0.130:8888
    mode http
    stats enable
    stats uri /
    stats refresh 10s
    stats realm HAProxy\ Statistics
    # Change these example credentials before using this outside a lab
    stats auth admin:admin
```

Validate the configuration, then restart HAProxy so that it loads the edited file:

```bash
sudo haproxy -c -f /etc/haproxy/haproxy.cfg
sudo systemctl restart haproxy
sudo systemctl status haproxy --no-pager -l
```
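To confirm that HAProxy can reach each backend, you can probe the API server port of every master directly from k8s-lb1. The sketch below assumes bash's `/dev/tcp` redirection and the coreutils `timeout` command are available. The helper names `probe_tcp` and `check_backends` are hypothetical. Before `kubeadm init` has run, every backend reports DOWN. That is expected, because no API server is listening yet.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: TCP reachability check for the HAProxy backends.
# Assumes bash's /dev/tcp pseudo-device and coreutils `timeout`.

# probe_tcp HOST PORT [TIMEOUT_SECONDS]: exit 0 if a TCP connection can be opened.
probe_tcp() {
  local host="$1" port="$2" secs="${3:-2}"
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# check_backends PORT HOST...: print "HOST:PORT UP" or "HOST:PORT DOWN" for each host.
check_backends() {
  local port="$1"; shift
  local host
  for host in "$@"; do
    if probe_tcp "$host" "$port"; then
      echo "${host}:${port} UP"
    else
      echo "${host}:${port} DOWN"
    fi
  done
}
```

For this cluster the call would be `check_backends 6443 192.168.0.131 192.168.0.132 192.168.0.111`. The stats page configured above (http://192.168.0.130:8888/) shows HAProxy's own view of the same backends.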

2. Preparation on all Kubernetes nodes (k8s-master1 to k8s-master3)


Install packages and configure the environment

```bash
sudo apt-get update && sudo apt-get install -y apt-transport-https ca-certificates curl gpg
KUBERNETES_VERSION="v1.27"
sudo mkdir -p -m 755 /etc/apt/keyrings
curl -fsSL https://pkgs.k8s.io/core:/stable:/${KUBERNETES_VERSION}/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/${KUBERNETES_VERSION}/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl
```

Disable swap (by default the kubelet refuses to start while swap is enabled)

```bash
sudo swapoff -a
```

Firewall settings

In v1.27 the kube-scheduler and kube-controller-manager serve only on their secure ports, 10259 and 10257. The old insecure ports 10251 and 10252 have been removed.

```bash
sudo ufw allow 6443/tcp          # API server port
sudo ufw allow 2379:2380/tcp     # etcd ports
sudo ufw allow 10250/tcp         # kubelet port
sudo ufw allow 10259/tcp         # kube-scheduler port
sudo ufw allow 10257/tcp         # kube-controller-manager port
sudo ufw allow 30000:32767/tcp   # NodePort range
```

NTP settings

```bash
sudo apt-get install -y ntp
sudo systemctl start ntp
sudo systemctl enable ntp
```
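`swapoff -a` only disables swap until the next reboot. One common way to make it persistent is to comment out the swap entries in `/etc/fstab`. The sketch below does that with `sed` and keeps a `.bak` backup of the file. The function name `comment_out_swap` is hypothetical. It assumes the standard fstab layout, where the third field is the filesystem type.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-format file.
# Assumes the usual layout "<device> <mountpoint> <type> <options> <dump> <pass>".

# comment_out_swap [FILE]: prefix '#' to every non-comment line whose type field is "swap".
# A backup of the original file is written to FILE.bak.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -E -i.bak \
    '/^[[:space:]]*[^#[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/ s/^/#/' \
    "$fstab"
}
```

On the nodes, run it as root (for example from a root shell: `swapoff -a; comment_out_swap /etc/fstab`), then check with `swapon --show` that no swap is active.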

3. Initialize the cluster on the first master node

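Initialize the Kubernetes cluster (on k8s-master1)

```bash
sudo kubeadm init --pod-network-cidr=10.244.0.0/16 \
  --control-plane-endpoint 192.168.0.130:6443 \
  --upload-certs | tee $HOME/kubeadm_init_output.log
```

- `--control-plane-endpoint <LOAD_BALANCER_DNS>:<PORT>`: `<LOAD_BALANCER_DNS>` is the DNS name or IP of the load balancer through which the API servers are reached (here 192.168.0.130, k8s-lb1).
- `<PORT>`: the load balancer port. The default is 6443.
- `--upload-certs`: uploads the control-plane certificates to the cluster so that the other masters can fetch them when they join with `--certificate-key`.
- `--pod-network-cidr`: the Pod IP range. It must match the pod network add-on deployed afterward (10.244.0.0/16 is Flannel's default, for example).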
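The same settings can also be kept in a kubeadm configuration file, which makes the init step easier to repeat. The sketch below assumes the `kubeadm.k8s.io/v1beta3` config API, which is what kubeadm v1.27 uses. It maps `--control-plane-endpoint` to `controlPlaneEndpoint` and `--pod-network-cidr` to `networking.podSubnet`. The helper name `write_kubeadm_config` and the file name `kubeadm-config.yaml` are illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal kubeadm ClusterConfiguration (v1beta3 API assumed).

# write_kubeadm_config OUTPUT_FILE CONTROL_PLANE_ENDPOINT POD_CIDR
write_kubeadm_config() {
  local out="$1" endpoint="$2" pod_cidr="$3"
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
controlPlaneEndpoint: "${endpoint}"
networking:
  podSubnet: "${pod_cidr}"
EOF
}
```

For this cluster: `write_kubeadm_config kubeadm-config.yaml 192.168.0.130:6443 10.244.0.0/16`, followed by `sudo kubeadm init --config kubeadm-config.yaml --upload-certs | tee $HOME/kubeadm_init_output.log`.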

```
root@k8s-master1:~$ sudo kubeadm init --pod-network-cidr=10.244.0.0/16 \
  --control-plane-endpoint 192.168.0.130:6443 \
  --upload-certs | tee $HOME/kubeadm_init_output.log
...
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 192.168.0.130:6443 --token tpzq96.7yui4p69lt5ntg47 \
    --discovery-token-ca-cert-hash sha256:2a884163bc08947a762f57918bde830c2381a45ca193591b76eb637fb99a6e58 \
    --control-plane --certificate-key 36243f5d802e993f97f35652113701a1d1dc7ae4cae4118820b45ea45b8a52a6

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.130:6443 --token tpzq96.7yui4p69lt5ntg47 \
    --discovery-token-ca-cert-hash sha256:2a884163bc08947a762f57918bde830c2381a45ca193591b76eb637fb99a6e58
```

Configure kubectl

```bash
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
```

4. Join the additional master nodes
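The join commands needed in this step are printed once by `kubeadm init`. In step 3 that output was saved to `$HOME/kubeadm_init_output.log` with `tee`. The awk-based sketch below pulls each `kubeadm join ...` command out of the log and joins its backslash-continued lines into one line. The function name `extract_join_commands` is hypothetical. It assumes the log keeps kubeadm's layout, where the control-plane join command comes first and the worker join command second.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the join commands from a saved `kubeadm init` log.

# extract_join_commands [LOG]: print each "kubeadm join ..." command on a single line.
extract_join_commands() {
  local log="${1:-$HOME/kubeadm_init_output.log}"
  awk '
    # A line starting with "kubeadm join" begins a new command.
    /^[ \t]*kubeadm join / { cmd = ""; injoin = 1 }
    injoin {
      line = $0
      sub(/^[ \t]+/, "", line)                # drop leading indentation
      cont = (line ~ /\\[ \t]*$/)             # trailing backslash: command continues
      sub(/[ \t]*\\[ \t]*$/, "", line)        # strip the backslash itself
      if (cmd == "") cmd = line; else cmd = cmd " " line
      if (!cont) { print cmd; injoin = 0 }
    }
  ' "$log"
}
```

To get only the control-plane variant: `extract_join_commands | grep -- '--control-plane'`.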

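Join from each additional master (run as root on k8s-master2 and k8s-master3)

```bash
kubeadm join 192.168.0.130:6443 --token tpzq96.7yui4p69lt5ntg47 \
  --discovery-token-ca-cert-hash sha256:2a884163bc08947a762f57918bde830c2381a45ca193591b76eb637fb99a6e58 \
  --control-plane --certificate-key 36243f5d802e993f97f35652113701a1d1dc7ae4cae4118820b45ea45b8a52a6
```

- `<TOKEN>` (`--token`): the bootstrap token created on the first master
- `<HASH>` (`--discovery-token-ca-cert-hash`): the hash of the cluster CA's public key, used to verify the cluster during discovery
- `<CERTIFICATE_KEY>` (`--certificate-key`): the key that decrypts the control-plane certificates uploaded with `--upload-certs`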
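The token in these commands expires (after 24 hours by default). As the init output warns, the uploaded certificates are deleted after two hours. If a master joins later, fresh values can be generated on an existing master. `kubeadm init phase upload-certs --upload-certs` prints a new certificate key, and `kubeadm token create --print-join-command --certificate-key <key>` prints the matching control-plane join command. The sketch below wraps both commands. The function names are hypothetical, and it assumes the certificate key is the 64-character hex line in the upload-certs output.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: regenerate a control-plane join command on an existing master.

# parse_certificate_key: read `kubeadm init phase upload-certs --upload-certs` output
# on stdin and print the certificate key (assumed to be a 64-character hex line).
parse_certificate_key() {
  grep -E '^[0-9a-f]{64}$' | tail -n 1
}

# print_control_plane_join: re-upload the certificates and print a full control-plane
# join command. Must be run as root on a master that is already part of the cluster.
print_control_plane_join() {
  local key
  key=$(kubeadm init phase upload-certs --upload-certs | parse_certificate_key)
  if [ -z "$key" ]; then
    echo "could not find a certificate key in the upload-certs output" >&2
    return 1
  fi
  # With --certificate-key, --print-join-command prints the control-plane variant.
  kubeadm token create --print-join-command --certificate-key "$key"
}
```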

```
root@k8s-master2:~$ kubeadm join 192.168.0.130:6443 --token tpzq96.7yui4p69lt5ntg47 \
  --discovery-token-ca-cert-hash sha256:2a884163bc08947a762f57918bde830c2381a45ca193591b76eb637fb99a6e58 \
  --control-plane --certificate-key 36243f5d802e993f97f35652113701a1d1dc7ae4cae4118820b45ea45b8a52a6
...
This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.
* The Kubelet was informed of the new secure connection details.
* Control plane label and taint were applied to the new node.
* The Kubernetes control plane instances scaled up.
* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.
```

Configure kubectl

```bash
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
```

5. Check the cluster status

Check nodes and pods

```bash
kubectl get nodes
```

```
$ kubectl get nodes
NAME          STATUS   ROLES           AGE   VERSION
k8s-master1   Ready    control-plane   50m   v1.27.16
k8s-master2   Ready    control-plane   47m   v1.27.16
k8s-master3   Ready    control-plane   46m   v1.27.16
```

```bash
kubectl get pods --all-namespaces
```

```
$ kubectl get pods --all-namespaces
NAMESPACE     NAME                                  READY   STATUS    RESTARTS        AGE
kube-system   coredns-5d78c9869d-52g7m              0/1     Running   0               51m
kube-system   coredns-5d78c9869d-qhhpr              0/1     Running   0               51m
kube-system   etcd-k8s-master1                      1/1     Running   19              51m
kube-system   etcd-k8s-master2                      1/1     Running   232             48m
kube-system   etcd-k8s-master3                      1/1     Running   2               47m
kube-system   kube-apiserver-k8s-master1            1/1     Running   11              51m
kube-system   kube-apiserver-k8s-master2            1/1     Running   233             48m
kube-system   kube-apiserver-k8s-master3            1/1     Running   39              47m
kube-system   kube-controller-manager-k8s-master1   1/1     Running   126 (48m ago)   51m
kube-system   kube-controller-manager-k8s-master2   1/1     Running   231             48m
kube-system   kube-controller-manager-k8s-master3   1/1     Running   40              47m
kube-system   kube-proxy-8dh8z                      1/1     Running   0               51m
kube-system   kube-proxy-mr67h                      1/1     Running   0               48m
kube-system   kube-proxy-xss5p                      1/1     Running   2               47m
kube-system   kube-scheduler-k8s-master1            1/1     Running   121 (48m ago)   51m
kube-system   kube-scheduler-k8s-master2            1/1     Running   219             48m
kube-system   kube-scheduler-k8s-master3            1/1     Running   39              47m
```
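In the output above, every pod's STATUS is Running. However, both coredns pods report 0/1 in the READY column, and several control-plane pods show high restart counts (232 for etcd-k8s-master2, for example). It is therefore worth checking the READY and RESTARTS columns, not only STATUS. The init output also asks for a pod network add-on to be deployed, and a missing or misconfigured pod network is a common reason for unready CoreDNS pods. That cause is a guess, not something this output confirms. The sketch below filters the plain-text `kubectl` output for these conditions. The function names are hypothetical, and the column positions assume the default `kubectl get pods --all-namespaces` and `kubectl get nodes` layouts.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: quick checks on plain-text kubectl output read from stdin.

# list_unready_pods: expects `kubectl get pods --all-namespaces` output
# (NAMESPACE NAME READY ...) and prints NAMESPACE/NAME for pods whose READY
# column shows fewer ready containers than total, e.g. 0/1.
list_unready_pods() {
  awk 'NR > 1 { split($3, r, "/"); if (r[1] + 0 < r[2] + 0) print $1 "/" $2 }'
}

# count_ready_control_plane: expects `kubectl get nodes` output
# (NAME STATUS ROLES ...) and prints how many control-plane nodes are exactly "Ready".
count_ready_control_plane() {
  awk 'NR > 1 && $2 == "Ready" && $3 ~ /control-plane/ { n++ } END { print n + 0 }'
}
```

Usage: `kubectl get pods --all-namespaces | list_unready_pods` and `kubectl get nodes | count_ready_control_plane`. For this cluster the second command should print 3.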

